Archive

Calendar Strategy: Month End

Calendar Strategy is a very simple strategy that buys an sells at the predetermined days, known in advance. Today I want to show how we can easily investigate performance at and around Month End days.

First let’s load historical prices for SPY from Yahoo Fiance and compute SPY perfromance at the month-ends. I.e. strategy will open long position at the close on the 30th and sell position at the close on the 31st.

###############################################################################

# Load Systematic Investor Toolbox (SIT)

# https://systematicinvestor.wordpress.com/systematic-investor-toolbox/

###############################################################################

setInternet2(TRUE)

con = gzcon(url('http://www.systematicportfolio.com/sit.gz', 'rb'))

source(con)

close(con)

#*****************************************************************

# Load historical data

#******************************************************************

load.packages('quantmod')

tickers = spl('SPY')

data <- new.env()

getSymbols.extra(tickers, src = 'yahoo', from = '1980-01-01', env = data, set.symbolnames = T, auto.assign = T)

for(i in data$symbolnames) data[[i]] = adjustOHLC(data[[i]], use.Adjusted=T)

bt.prep(data, align='keep.all', fill.gaps = T)

#*****************************************************************

# Setup

#*****************************************************************

prices = data$prices

n = ncol(prices)

models = list()

period.ends = date.month.ends(data$dates, F)

#*****************************************************************

# Strategy

#*****************************************************************

key.date = NA * prices

key.date[period.ends] = T

universe = prices > 0

signal = key.date

data$weight[] = NA

data$weight[] = ifna(universe & key.date, F)

models$T0 = bt.run.share(data, do.lag = 0, trade.summary=T, clean.signal=T)

Please note that above, in the bt.run.share call, I set do.lag parameter equal to zero (the default value for the do.lag parameter is one). The reason for default setting equal to one is due to signal (decision to trade) is derived using all information available today, so the position can only be implement next day. I.e.

portfolio.returns = lag(signal, do.lag) * returns = lag(signal, 1) * returns

However, in case of the calendar strategy there is no need to lag signal because the trade day is known in advance. I.e.

portfolio.returns = lag(signal, do.lag) * returns = signal * returns

Next, I created two functions to help with signal creation and strategy testing:

calendar.strategy <- function(data, signal, universe = data$prices > 0) {

data$weight[] = NA

data$weight[] = ifna(universe & signal, F)

bt.run.share(data, do.lag = 0, trade.summary=T, clean.signal=T)

}

calendar.signal <- function(key.date, offsets = 0) {

signal = mlag(key.date, offsets[1])

for(i in offsets) signal = signal | mlag(key.date, i)

signal

}

# Trade on key.date

models$T0 = calendar.strategy(data, key.date)

# Trade next day after key.date

models$N1 = calendar.strategy(data, mlag(key.date,1))

# Trade two days next(after) key.date

models$N2 = calendar.strategy(data, mlag(key.date,2))

# Trade a day prior to key.date

models$P1 = calendar.strategy(data, mlag(key.date,-1))

# Trade two days prior to key.date

models$P2 = calendar.strategy(data, mlag(key.date,-2))

# Trade: open 2 days before the key.date and close 2 days after the key.date

signal = key.date | mlag(key.date,-1) | mlag(key.date,-2) | mlag(key.date,1) | mlag(key.date,2)

models$P2N2 = calendar.strategy(data, signal)

# same, but using helper function above

models$P2N2 = calendar.strategy(data, calendar.signal(key.date, -2:2))

strategy.performance.snapshoot(models, T)

strategy.performance.snapshoot(models, control=list(comparison=T), sort.performance=F)

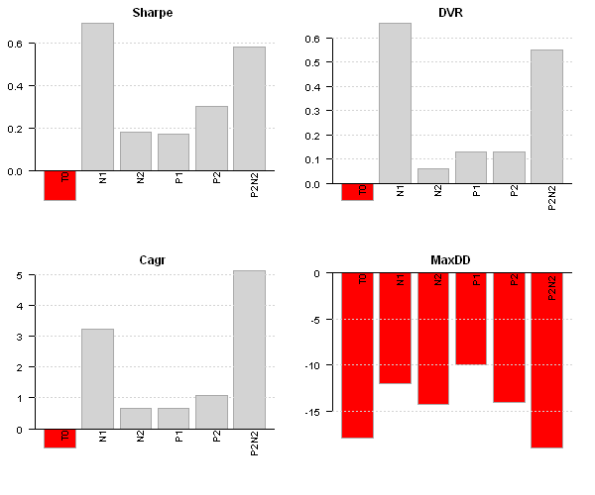

Above, T0 is a calendar strategy that buys on 30th and sells on 31st. I.e. position is only held on a month end day. P1 and P2 are two strategies that buy a day prior and two days prior correspondingly. N1 and N2 are two strategies that buy a day after and two days after correspondingly.

The N1 strategy, buy on 31st and sell on the 1st next month seems to be working best for SPY.

Finally, let’s look at the actual trades:

last.trades <- function(model, n=20, make.plot=T, return.table=F) {

ntrades = min(n, nrow(model$trade.summary$trades))

trades = last(model$trade.summary$trades, ntrades)

if(make.plot) {

layout(1)

plot.table(trades)

}

if(return.table) trades

}

last.trades(models$P2)

The P2 strategy enters position at the close 3 days before the month end and exits positions at the close 2 days before the month end. I.e. the performance is due to returns only 2 days before the month end.

With this post I wanted to show how easily we can study calendar strategy performance using the Systematic Investor Toolbox.

Next, I will demonstrate calendar strategy applications to variety of important dates.

To view the complete source code for this example, please have a look at the bt.calendar.strategy.month.end.test() function in bt.test.r at github.

Probabilistic Momentum

David Varadi has recently discussed an interesting strategy in the

Are Simple Momentum Strategies Too Dumb? Introducing Probabilistic Momentum post. David also provided the Probabilistic Momentum Spreadsheet if you are interested in doing computations in Excel. Today I want to show how you can test such strategy using the Systematic Investor Toolbox:

###############################################################################

# Load Systematic Investor Toolbox (SIT)

# https://systematicinvestor.wordpress.com/systematic-investor-toolbox/

###############################################################################

setInternet2(TRUE)

con = gzcon(url('http://www.systematicportfolio.com/sit.gz', 'rb'))

source(con)

close(con)

#*****************************************************************

# Load historical data

#******************************************************************

load.packages('quantmod')

tickers = spl('SPY,TLT')

data <- new.env()

getSymbols(tickers, src = 'yahoo', from = '1980-01-01', env = data, auto.assign = T)

for(i in ls(data)) data[[i]] = adjustOHLC(data[[i]], use.Adjusted=T)

bt.prep(data, align='remove.na', dates='2005::')

#*****************************************************************

# Setup

#******************************************************************

lookback.len = 60

prices = data$prices

models = list()

#*****************************************************************

# Simple Momentum

#******************************************************************

momentum = prices / mlag(prices, lookback.len)

data$weight[] = NA

data$weight$SPY[] = momentum$SPY > momentum$TLT

data$weight$TLT[] = momentum$SPY <= momentum$TLT

models$Simple = bt.run.share(data, clean.signal=T)

The Simple Momentum strategy invests into SPY if SPY’s momentum if greater than TLT’s momentum, and invests into TLT otherwise.

#***************************************************************** # Probabilistic Momentum #****************************************************************** confidence.level = 60/100 ret = prices / mlag(prices) - 1 ir = sqrt(lookback.len) * runMean(ret$SPY - ret$TLT, lookback.len) / runSD(ret$SPY - ret$TLT, lookback.len) momentum.p = pt(ir, lookback.len - 1) data$weight[] = NA data$weight$SPY[] = iif(cross.up(momentum.p, confidence.level), 1, iif(cross.dn(momentum.p, (1 - confidence.level)), 0,NA)) data$weight$TLT[] = iif(cross.dn(momentum.p, (1 - confidence.level)), 1, iif(cross.up(momentum.p, confidence.level), 0,NA)) models$Probabilistic = bt.run.share(data, clean.signal=T)

The Probabilistic Momentum strategy is using Probabilistic Momentum measure and Confidence Level to decide on allocation. Strategy invests into SPY if SPY vs TLT Probabilistic Momentum is above Confidence Level and invests into TLT is SPY vs TLT Probabilistic Momentum is below 1 – Confidence Level.

To make Strategy a bit more attractive, I added a version that can leverage SPY allocation by 50%

#***************************************************************** # Probabilistic Momentum + SPY Leverage #****************************************************************** data$weight[] = NA data$weight$SPY[] = iif(cross.up(momentum.p, confidence.level), 1, iif(cross.up(momentum.p, (1 - confidence.level)), 0,NA)) data$weight$TLT[] = iif(cross.dn(momentum.p, (1 - confidence.level)), 1, iif(cross.up(momentum.p, confidence.level), 0,NA)) models$Probabilistic.Leverage = bt.run.share(data, clean.signal=T) #***************************************************************** # Create Report #****************************************************************** strategy.performance.snapshoot(models, T)

The back-test results look very similar to the ones reported in the Are Simple Momentum Strategies Too Dumb? Introducing Probabilistic Momentum post.

However, I was not able to exactly reproduce the transition plots. Looks like my interpretation is producing more whipsaw when desired.

#*****************************************************************

# Visualize Signal

#******************************************************************

cols = spl('steelblue1,steelblue')

prices = scale.one(data$prices)

layout(1:3)

plota(prices$SPY, type='l', ylim=range(prices), plotX=F, col=cols[1], lwd=2)

plota.lines(prices$TLT, type='l', plotX=F, col=cols[2], lwd=2)

plota.legend('SPY,TLT',cols,as.list(prices))

highlight = models$Probabilistic$weight$SPY > 0

plota.control$col.x.highlight = iif(highlight, cols[1], cols[2])

plota(models$Probabilistic$equity, type='l', plotX=F, x.highlight = highlight | T)

plota.legend('Probabilistic,SPY,TLT',c('black',cols))

highlight = models$Simple$weight$SPY > 0

plota.control$col.x.highlight = iif(highlight, cols[1], cols[2])

plota(models$Simple$equity, type='l', plotX=T, x.highlight = highlight | T)

plota.legend('Simple,SPY,TLT',c('black',cols))

David thank you very much for sharing your great ideas. I would encourage readers to play with this strategy and report back.

To view the complete source code for this example, please have a look at the bt.probabilistic.momentum.test() function in bt.test.r at github.

Weekend Reading: F-Squared

Mebane Faber posted another interesting blog post: Building a Simple Sector Rotation on Momentum and Trend that caught my interest. Today I want to show how you can test such strategy using the Systematic Investor Toolbox:

###############################################################################

# Load Systematic Investor Toolbox (SIT)

# https://systematicinvestor.wordpress.com/systematic-investor-toolbox/

###############################################################################

setInternet2(TRUE)

con = gzcon(url('http://www.systematicportfolio.com/sit.gz', 'rb'))

source(con)

close(con)

#*****************************************************************

# Load historical data

#******************************************************************

load.packages('quantmod')

data = new.env()

# load historical market returns

temp = get.fama.french.data('F-F_Research_Data_Factors', periodicity = '',download = T, clean = T)

ret = cbind(temp[[1]]$Mkt.RF + temp[[1]]$RF, temp[[1]]$RF)

price = bt.apply.matrix(ret / 100, function(x) cumprod(1 + x))

data$SPY = make.stock.xts( price$Mkt.RF )

data$SHY = make.stock.xts( price$RF )

# load historical sector returns

temp = get.fama.french.data('10_Industry_Portfolios', periodicity = '',download = T, clean = T)

ret = temp[[1]]

price = bt.apply.matrix(ret[,1:9] / 100, function(x) cumprod(1 + x))

for(n in names(price)) data[[n]] = make.stock.xts( price[,n] )

# align dates

data$symbolnames = c(names(price), 'SHY', 'SPY')

bt.prep(data, align='remove.na', dates='2000::')

# back-test dates

bt.dates = '2001:04::'

#*****************************************************************

# Setup

#******************************************************************

prices = data$prices

n = ncol(data$prices)

models = list()

#*****************************************************************

# Benchmark Strategies

#******************************************************************

data$weight[] = NA

data$weight$SPY[1] = 1

models$SPY = bt.run.share(data, clean.signal=F, dates=bt.dates)

weight = prices

weight$SPY = NA

weight$SHY = NA

data$weight[] = NA

data$weight[] = ntop(weight[], n)

models$EW = bt.run.share(data, clean.signal=F, dates=bt.dates)

#*****************************************************************

# Code Strategies

# http://www.mebanefaber.com/2013/12/04/square-root-of-f-squared/

#******************************************************************

sma = bt.apply.matrix(prices, SMA, 10)

# create position score

position.score = sma

position.score[ prices < sma ] = NA

position.score$SHY = NA

position.score$SPY = NA

# equal weight allocation

weight = ntop(position.score[], n)

# number of invested funds

n.selected = rowSums(weight != 0)

# cash logic

weight$SHY[n.selected == 0,] = 1

weight[n.selected == 1,] = 0.25 * weight[n.selected == 1,]

weight$SHY[n.selected == 1,] = 0.75

weight[n.selected == 2,] = 0.5 * weight[n.selected == 2,]

weight$SHY[n.selected == 2,] = 0.5

weight[n.selected == 3,] = 0.75 * weight[n.selected == 3,]

weight$SHY[n.selected == 3,] = 0.25

# cbind(round(100*weight,0), n.selected)

data$weight[] = NA

data$weight[] = weight

models$strategy1 = bt.run.share(data, clean.signal=F, dates=bt.dates)

#*****************************************************************

# Create Report

#******************************************************************

strategy.performance.snapshoot(models, one.page = T)

Mebane thank you very much for sharing your great ideas. I would encourage readers to play with this strategy and report back.

Please note that I back-tested the strategy using the monthly observations. The strategy’s draw-down is around 17% using monthly data. If we switch to the daily data, the strategy’s draw-down goes to around 22%. There was one really bad month in 2002.

To view the complete source code for this example, please have a look at the bt.mebanefaber.f.squared.test() function in bt.test.r at github.

Averaged Input Assumptions and Momentum

Today I want to share another interesting idea contributed by Pierre Chretien. Pierre suggested using Averaged Input Assumptions and Momentum to create reasonably quiet strategy. The averaging techniques are used to avoid over-fitting any particular frequency.

To create Averaged Input Assumptions we combine returns over different look-back periods, giving more weight to the recent returns, to form overall Input Assumptions.

create.ia.averaged <- function(lookbacks, n.lag) {

lookbacks = lookbacks

n.lag = n.lag

function(hist.returns, index=1:ncol(hist.returns), hist.all)

{

nperiods = nrow(hist.returns)

temp = c()

for (n.lookback in lookbacks)

temp = rbind(temp, hist.returns[(nperiods - n.lookback - n.lag + 1):(nperiods - n.lag), ])

create.ia(temp, index, hist.all)

}

}

To create Averaged Momentum we take a look-back weighted avaerage of momentums computed over different look-back periods.

momentum.averaged <- function(prices,

lookbacks = c(20,60,120,250) , # length of momentum look back

n.lag = 3

) {

momentum = 0 * prices

for (n.lookback in lookbacks) {

part.mom = mlag(prices, n.lag) / mlag(prices, n.lookback + n.lag) - 1

momentum = momentum + 252 / n.lookback * part.mom

}

momentum / len(lookbacks)

}

Next let’s compare using historical Input Assumptions vs Averaged Input Assumptions and Momentum vs Averaged Momentum. I will consider Absolute Momentum (not cross sectional), for more information about relative and absolute momentum, please see

Absolute Momentum: A Simple Rule-Based Strategy and Universal Trend-Following Overlay, Gary Antonacci, 2013

Generalized Momentum and Flexible Asset Allocation (FAA): An Heuristic Approach by W.J. Keller and H. S. Van Putten 2012

###############################################################################

# Load Systematic Investor Toolbox (SIT)

# https://systematicinvestor.wordpress.com/systematic-investor-toolbox/

###############################################################################

setInternet2(TRUE)

con = gzcon(url('http://www.systematicportfolio.com/sit.gz', 'rb'))

source(con)

close(con)

#*****************************************************************

# Load historical data

#******************************************************************

load.packages('quantmod')

# 10 funds

tickers = spl('Us.Eq = VTI + VTSMX,

Eurpoe.Eq = IEV + FIEUX,

Japan.Eq = EWJ + FJPNX,

Emer.Eq = EEM + VEIEX,

Re = RWX + VNQ + VGSIX,

Com = DBC + QRAAX,

Gold = GLD + SCGDX,

Long.Tr = TLT + VUSTX,

Mid.Tr = IEF + VFITX,

Short.Tr = SHY + VFISX')

start.date = 1998

dates = paste(start.date,'::',sep='')

data <- new.env()

getSymbols.extra(tickers, src = 'yahoo', from = '1980-01-01', env = data, set.symbolnames = T, auto.assign = T)

for(i in data$symbolnames) data[[i]] = adjustOHLC(data[[i]], use.Adjusted=T)

bt.prep(data, align='keep.all', dates=paste(start.date-2,':12::',sep=''), fill.gaps = T)

#*****************************************************************

# Setup

#******************************************************************

prices = data$prices

n = ncol(prices)

nperiods = nrow(prices)

periodicity = 'months'

period.ends = endpoints(prices, periodicity)

period.ends = period.ends[period.ends > 0]

max.product.exposure = 0.6

#*****************************************************************

# Input Assumptions

#******************************************************************

lookback.len = 40

create.ia.fn = create.ia

# input assumptions are averaged on 20, 40, 60 days using 1 day lag

ia.array = c(20,40,60)

avg.create.ia.fn = create.ia.averaged(ia.array, 1)

#*****************************************************************

# Momentum

#******************************************************************

universe = prices > 0

mom.lookback.len = 120

momentum = prices / mlag(prices, mom.lookback.len) - 1

mom.universe = ifna(momentum > 0, F)

# momentum is averaged on 20,60,120,250 days using 3 day lag

mom.array = c(20,60,120,250)

avg.momentum = momentum.averaged(prices, mom.array, 3)

avgmom.universe = ifna(avg.momentum > 0, F)

#*****************************************************************

# Algos

#******************************************************************

min.risk.fns = list(

EW = equal.weight.portfolio,

MV = min.var.portfolio,

MCE = min.corr.excel.portfolio,

MV.RSO = rso.portfolio(min.var.portfolio, 3, 100, const.ub = max.product.exposure),

MCE.RSO = rso.portfolio(min.corr.excel.portfolio, 3, 100, const.ub = max.product.exposure)

)

#*****************************************************************

# Code Strategies

#******************************************************************

make.strategy.custom <- function(name, create.ia.fn, lookback.len, universe, env) {

obj = portfolio.allocation.helper(data$prices,

periodicity = periodicity,

universe = universe,

lookback.len = lookback.len,

create.ia.fn = create.ia.fn,

const.ub = max.product.exposure,

min.risk.fns = min.risk.fns,

adjust2positive.definite = F

)

env[[name]] = create.strategies(obj, data, prefix=paste(name,'.',sep=''))$models

}

models <- new.env()

make.strategy.custom('ia.none' , create.ia.fn , lookback.len, universe , models)

make.strategy.custom('ia.mom' , create.ia.fn , lookback.len, mom.universe , models)

make.strategy.custom('ia.avg_mom' , create.ia.fn , lookback.len, avgmom.universe, models)

make.strategy.custom('avg_ia.none' , avg.create.ia.fn, 252 , universe , models)

make.strategy.custom('avg_ia.mom' , avg.create.ia.fn, 252 , mom.universe , models)

make.strategy.custom('avg_ia.avg_mom' , avg.create.ia.fn, 252 , avgmom.universe, models)

#*****************************************************************

# Create Report

#*****************************************************************

strategy.snapshot.custom <- function(models, n = 0, title = NULL) {

if (n > 0)

models = models[ as.vector(matrix(1:len(models),ncol=n, byrow=T)) ]

layout(1:3)

plotbt(models, plotX = T, log = 'y', LeftMargin = 3, main = title)

mtext('Cumulative Performance', side = 2, line = 1)

plotbt.strategy.sidebyside(models)

barplot.with.labels(sapply(models, compute.turnover, data), 'Average Annual Portfolio Turnover', T)

}

# basic vs basic + momentum => momentum filter has better results

models.final = c(models$ia.none, models$ia.mom)

strategy.snapshot.custom(models.final, len(min.risk.fns), 'Momentum Filter')

# basic vs basic + avg ia => averaged ia reduce turnover

models.final = c(models$ia.none, models$avg_ia.none)

strategy.snapshot.custom(models.final, len(min.risk.fns), 'Averaged Input Assumptions')

# basic + momentum vs basic + avg.momentum => mixed results for averaged momentum

models.final = c(models$ia.mom, models$ia.avg_mom)

strategy.snapshot.custom(models.final, len(min.risk.fns), 'Averaged Momentum')

# basic + momentum vs avg ia + avg.momentum

models.final = c(models$ia.mom, models$avg_ia.avg_mom)

strategy.snapshot.custom(models.final, len(min.risk.fns), 'Averaged vs Base')

Above, I compared results for the following 4 cases:

1. Adding Momentum filter: all algos perfrom better

2. Input Assumptions vs Averaged Input Assumptions: returns are very similar, but using Averaged Input Assumptions helps reduce portfolio turnover.

3. Momentum vs Averaged Momentum: returns are very similar, but using Averaged Momentum increases portfolio turnover.

4. historical Input Assumptions + Momentum vs Averaged Input Assumptions + Averaged Momentum: results are mixed, no consistent advantage of using Averaged methods

Overall, the Averaged methods is a very interesting idea and I hope you will experiemtn with it and share your findings, like Pierre. Pierre, again thank you very much for sharing.

The full source code and example for the bt.averaged.test() function is available in bt.test.r at github.

Fast Threshold Clustering Algorithm (FTCA) test

Today I want to share the test and implementation for the Fast Threshold Clustering Algorithm (FTCA) created by David Varadi. This implementation was developed and contributed by Pierre Chretien, I only made minor updates.

Let’s first replicate the results from the Fast Threshold Clustering Algorithm (FTCA) post:

###############################################################################

# Load Systematic Investor Toolbox (SIT)

# https://systematicinvestor.wordpress.com/systematic-investor-toolbox/

###############################################################################

setInternet2(TRUE)

con = gzcon(url('http://www.systematicportfolio.com/sit.gz', 'rb'))

source(con)

close(con)

#*****************************************************************

# Load historical data for ETFs

#******************************************************************

load.packages('quantmod')

tickers = spl('XLY,XLP,XLE,XLF,XLV,XLI,XLB,XLK,XLU')

data <- new.env()

getSymbols(tickers, src = 'yahoo', from = '1900-01-01', env = data, auto.assign = T)

for(i in ls(data)) data[[i]] = adjustOHLC(data[[i]], use.Adjusted=T)

bt.prep(data, align='keep.all')

#*****************************************************************

# Helper function to compute portfolio allocation additional stats

#******************************************************************

portfolio.allocation.custom.stats.clusters <- function(x,ia) {

return(list(

clusters.FTCA = cluster.group.FTCA(0.5)(ia)

))

}

#*****************************************************************

# Find clusters

#******************************************************************

periodicity = 'months'

lookback.len = 252

obj = portfolio.allocation.helper(data$prices,

periodicity = periodicity, lookback.len = lookback.len,

min.risk.fns = list(EW=equal.weight.portfolio),

custom.stats.fn = portfolio.allocation.custom.stats.clusters

)

clusters = obj$clusters.FTCA$EW

clusters['2012:05::']

The clusters are stable and match David’s results

XLB XLE XLF XLI XLK XLP XLU XLV XLY

2012-05-31 1 1 1 1 1 1 1 1 1

2012-06-29 1 1 1 1 1 1 1 1 1

2012-07-31 1 1 1 1 1 1 1 1 1

2012-08-31 1 1 1 1 1 1 1 1 1

2012-09-28 1 1 1 1 1 1 1 1 1

2012-10-31 1 1 1 1 1 1 1 1 1

2012-11-30 2 2 2 2 2 2 1 2 2

2012-12-31 2 2 2 2 2 2 1 2 2

2013-01-31 2 2 2 2 2 2 1 2 2

2013-02-28 1 1 1 1 1 1 1 1 1

2013-03-28 1 1 1 1 1 1 1 1 1

2013-04-30 1 1 1 1 1 1 1 1 1

2013-05-31 1 1 1 1 1 1 1 1 1

2013-06-28 1 1 1 1 1 1 1 1 1

2013-07-31 1 1 1 1 1 1 1 1 1

2013-08-30 1 1 1 1 1 1 1 1 1

2013-09-30 1 1 1 1 1 1 1 1 1

2013-10-31 1 1 1 1 1 1 1 1 1

2013-11-26 1 1 1 1 1 1 1 1 1

Next let’s compare the Cluster Portfolio Allocation Algorithm using K-means and FTCA:

#***************************************************************** # Code Strategies #****************************************************************** obj = portfolio.allocation.helper(data$prices, periodicity = periodicity, lookback.len = lookback.len, min.risk.fns = list( C.EW.kmeans = distribute.weights(equal.weight.portfolio, cluster.group.kmeans.90), C.EW.FTCA = distribute.weights(equal.weight.portfolio, cluster.group.FTCA(0.5)) ) ) models = create.strategies(obj, data)$models #***************************************************************** # Create Report #****************************************************************** barplot.with.labels(sapply(models, compute.turnover, data), 'Average Annual Portfolio Turnover')

Both clustering algorithms produced very similar results. One noticeable difference is turnover. Since the Fast Threshold Clustering Algorithm (FTCA) produced more stable groups, it had smaller turnover.

The full source code and example for the cluster.group.FTCA() function is available in strategy.r at github.