
Adjusted Momentum

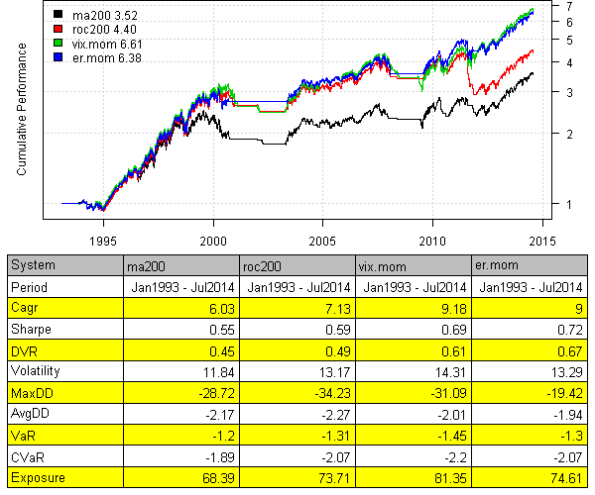

David Varadi has published two excellent posts / ideas about cooking with momentum:

I just could not resist the urge to share these ideas with you. Below is an implementation using the Systematic Investor Toolbox.

###############################################################################

# Load Systematic Investor Toolbox (SIT)

# https://systematicinvestor.wordpress.com/systematic-investor-toolbox/

###############################################################################

setInternet2(TRUE)

con = gzcon(url('http://www.systematicportfolio.com/sit.gz', 'rb'))

source(con)

close(con)

#*****************************************************************

# Load historical data

#******************************************************************

load.packages('quantmod')

tickers = spl('SPY,^VIX')

data <- new.env()

getSymbols(tickers, src = 'yahoo', from = '1980-01-01', env = data, auto.assign = T)

for(i in ls(data)) data[[i]] = adjustOHLC(data[[i]], use.Adjusted=T)

bt.prep(data, align='remove.na', fill.gaps = T)

VIX = Cl(data$VIX)

bt.prep.remove.symbols(data, 'VIX')

#*****************************************************************

# Setup

#*****************************************************************

prices = data$prices

models = list()

#*****************************************************************

# 200 SMA

#******************************************************************

data$weight[] = NA

data$weight[] = iif(prices > SMA(prices, 200), 1, 0)

models$ma200 = bt.run.share(data, clean.signal=T)

#*****************************************************************

# 200 ROC

#******************************************************************

roc = prices / mlag(prices) - 1

data$weight[] = NA

data$weight[] = iif(SMA(roc, 200) > 0, 1, 0)

models$roc200 = bt.run.share(data, clean.signal=T)

#*****************************************************************

# 200 VIX MOM

#******************************************************************

data$weight[] = NA

data$weight[] = iif(SMA(roc/VIX, 200) > 0, 1, 0)

models$vix.mom = bt.run.share(data, clean.signal=T)

#*****************************************************************

# 200 ER MOM

#******************************************************************

forecast = SMA(roc,10)

error = roc - mlag(forecast)

mae = SMA(abs(error), 10)

data$weight[] = NA

data$weight[] = iif(SMA(roc/mae, 200) > 0, 1, 0)

models$er.mom = bt.run.share(data, clean.signal=T)

#*****************************************************************

# Report

#******************************************************************

strategy.performance.snapshoot(models, T)
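To make the error-adjusted momentum rule above easier to follow, here is a minimal base R sketch on simulated returns. It does not use SIT: `sma()` and `lag_vec()` are hypothetical stand-ins for `SMA()` and `mlag()`, and the random return series is an assumption made purely for illustration.

```r
# Trailing simple moving average; the first n-1 values are NA
sma <- function(x, n) as.numeric(stats::filter(x, rep(1 / n, n), sides = 1))

# Lag a vector by k periods, padding the start with NA
lag_vec <- function(x, k = 1) c(rep(NA, k), head(x, -k))

set.seed(1)
ret <- rnorm(500, mean = 0.0005, sd = 0.01)  # simulated daily returns (assumption)

forecast <- sma(ret, 10)               # naive forecast: 10-day average return
err      <- ret - lag_vec(forecast)    # today's return vs. yesterday's forecast
mae      <- sma(abs(err), 10)          # 10-day mean absolute forecast error

adj.ret <- ret / mae                   # return scaled by recent forecast error
signal  <- as.integer(sma(adj.ret, 200) > 0)  # 1 = long, 0 = flat, NA = warm-up
```

The signal stays NA until every moving average has enough history, which is why the first couple of hundred observations carry no position.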

Please enjoy and share your ideas with David and myself.

To view the complete source code for this example, please have a look at the bt.adjusted.momentum.test() function in bt.test.r at github.

Calendar Strategy: Month End

Calendar Strategy is a very simple strategy that buys and sells on predetermined days, known in advance. Today I want to show how we can easily investigate performance at and around Month End days.

First let’s load historical prices for SPY from Yahoo Finance and compute SPY performance at the month-ends. I.e. the strategy will open a long position at the close on the 30th and sell the position at the close on the 31st.

###############################################################################

# Load Systematic Investor Toolbox (SIT)

# https://systematicinvestor.wordpress.com/systematic-investor-toolbox/

###############################################################################

setInternet2(TRUE)

con = gzcon(url('http://www.systematicportfolio.com/sit.gz', 'rb'))

source(con)

close(con)

#*****************************************************************

# Load historical data

#******************************************************************

load.packages('quantmod')

tickers = spl('SPY')

data <- new.env()

getSymbols.extra(tickers, src = 'yahoo', from = '1980-01-01', env = data, set.symbolnames = T, auto.assign = T)

for(i in data$symbolnames) data[[i]] = adjustOHLC(data[[i]], use.Adjusted=T)

bt.prep(data, align='keep.all', fill.gaps = T)

#*****************************************************************

# Setup

#*****************************************************************

prices = data$prices

n = ncol(prices)

models = list()

period.ends = date.month.ends(data$dates, F)

#*****************************************************************

# Strategy

#*****************************************************************

key.date = NA * prices

key.date[period.ends] = T

universe = prices > 0

signal = key.date

data$weight[] = NA

data$weight[] = ifna(universe & key.date, F)

models$T0 = bt.run.share(data, do.lag = 0, trade.summary=T, clean.signal=T)

Please note that in the bt.run.share call above I set the do.lag parameter to zero (its default value is one). The default is one because the signal (the decision to trade) is derived using all information available today, so the position can only be implemented the next day. I.e.

portfolio.returns = lag(signal, do.lag) * returns = lag(signal, 1) * returns

However, in the case of a calendar strategy there is no need to lag the signal because the trade day is known in advance. I.e.

portfolio.returns = lag(signal, do.lag) * returns = signal * returns
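The effect of do.lag can be seen on a toy example. The sketch below is plain base R, not SIT code; `lag_vec()` is a hypothetical helper and the five daily returns are made-up numbers.

```r
# Lag a vector by k periods (k = 0 leaves it unchanged)
lag_vec <- function(x, k) if (k == 0) x else c(rep(NA, k), head(x, -k))

returns <- c(0.01, -0.02, 0.03, 0.01, -0.01)  # made-up daily returns
signal  <- c(0, 0, 1, 0, 0)                   # day 3 is the key date

# do.lag = 0: the position earns the return of the key date itself
ret.lag0 <- lag_vec(signal, 0) * returns

# do.lag = 1: the position earns the return of the day after the key date
ret.lag1 <- lag_vec(signal, 1) * returns

sum(ret.lag0)                # 0.03, the return on day 3
sum(ret.lag1, na.rm = TRUE)  # 0.01, the return on day 4
```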

Next, I created two functions to help with signal creation and strategy testing:

calendar.strategy <- function(data, signal, universe = data$prices > 0) {

data$weight[] = NA

data$weight[] = ifna(universe & signal, F)

bt.run.share(data, do.lag = 0, trade.summary=T, clean.signal=T)

}

calendar.signal <- function(key.date, offsets = 0) {

signal = mlag(key.date, offsets[1])

for(i in offsets) signal = signal | mlag(key.date, i)

signal

}

# Trade on key.date

models$T0 = calendar.strategy(data, key.date)

# Trade next day after key.date

models$N1 = calendar.strategy(data, mlag(key.date,1))

# Trade two days after key.date

models$N2 = calendar.strategy(data, mlag(key.date,2))

# Trade a day prior to key.date

models$P1 = calendar.strategy(data, mlag(key.date,-1))

# Trade two days prior to key.date

models$P2 = calendar.strategy(data, mlag(key.date,-2))

# Trade: open 2 days before the key.date and close 2 days after the key.date

signal = key.date | mlag(key.date,-1) | mlag(key.date,-2) | mlag(key.date,1) | mlag(key.date,2)

models$P2N2 = calendar.strategy(data, signal)

# same, but using helper function above

models$P2N2 = calendar.strategy(data, calendar.signal(key.date, -2:2))

strategy.performance.snapshoot(models, T)

strategy.performance.snapshoot(models, control=list(comparison=T), sort.performance=F)

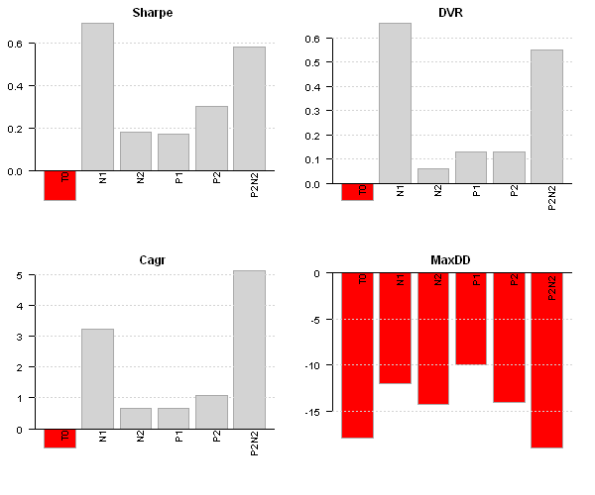

Above, T0 is a calendar strategy that buys on the 30th and sells on the 31st. I.e. the position is only held on a month end day. P1 and P2 are two strategies that buy a day prior and two days prior, respectively. N1 and N2 are two strategies that buy a day after and two days after, respectively.

The N1 strategy (buy on the 31st and sell on the 1st of the next month) seems to be working best for SPY.
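The way offsets move the holding day around the key date can be checked on a short logical vector. This is a base R sketch; `shift()` is a hypothetical stand-in for what mlag() does in the code above, padding with FALSE instead of NA to keep the example short.

```r
# Shift a logical vector by k periods: k > 0 moves flags later in time,
# k < 0 moves them earlier. Assumes abs(k) < length(x).
shift <- function(x, k) {
  n <- length(x)
  if (k > 0) c(rep(FALSE, k), x[seq_len(n - k)])
  else if (k < 0) c(x[(1 - k):n], rep(FALSE, -k))
  else x
}

key.date <- c(FALSE, FALSE, FALSE, TRUE, FALSE, FALSE, FALSE)  # day 4 is month end

which(shift(key.date, 1))   # N1: position held on day 5
which(shift(key.date, -1))  # P1: position held on day 3

# P2N2: union of all offsets from -2 to 2, i.e. days 2 through 6
window <- Reduce(`|`, lapply(-2:2, function(k) shift(key.date, k)))
which(window)
```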

Finally, let’s look at the actual trades:

last.trades <- function(model, n=20, make.plot=T, return.table=F) {

ntrades = min(n, nrow(model$trade.summary$trades))

trades = last(model$trade.summary$trades, ntrades)

if(make.plot) {

layout(1)

plot.table(trades)

}

if(return.table) trades

}

last.trades(models$P2)

The P2 strategy enters a position at the close 3 days before the month end and exits the position at the close 2 days before the month end. I.e. the performance is due to returns only 2 days before the month end.

With this post I wanted to show how easily we can study calendar strategy performance using the Systematic Investor Toolbox.

Next, I will demonstrate calendar strategy applications to a variety of important dates.

To view the complete source code for this example, please have a look at the bt.calendar.strategy.month.end.test() function in bt.test.r at github.

Stochastic Oscillator

I came across the link to the John Ehlers paper, Predictive Indicators for Effective Trading Strategies, while reading the Dekalog Blog. John Ehlers offers a different way to smooth prices and incorporates the new filter into the oscillator construction. Fortunately, the EasyLanguage code was also provided and I was able to translate it into R.

Following are some results from the paper and test of John’s stochastic oscillator.

###############################################################################

# Super Smoother filter 2013 John F. Ehlers

# http://www.stockspotter.com/files/PredictiveIndicators.pdf

###############################################################################

super.smoother.filter <- function(x) {

a1 = exp( -1.414 * pi / 10.0 )

b1 = 2.0 * a1 * cos( (1.414*180.0/10.0) * pi / 180.0 )

c2 = b1

c3 = -a1 * a1

c1 = 1.0 - c2 - c3

x = c1 * (x + mlag(x)) / 2

x[1] = x[2]

out = x * NA

out[] = filter(x, c(c2, c3), method='recursive', init=c(0,0))

out

}

# Roofing filter 2013 John F. Ehlers

roofing.filter <- function(x) {

# Highpass filter cyclic components whose periods are shorter than 48 bars

alpha1 = (cos((0.707*360 / 48) * pi / 180.0 ) + sin((0.707*360 / 48) * pi / 180.0 ) - 1) / cos((0.707*360 / 48) * pi / 180.0 )

x = (1 - alpha1 / 2)*(1 - alpha1 / 2)*( x - 2*mlag(x) + mlag(x,2))

x[1] = x[2] = x[3]

HP = x * NA

HP[] = filter(x, c(2*(1 - alpha1), - (1 - alpha1)*(1 - alpha1)), method='recursive', init=c(0,0))

super.smoother.filter(HP)

}

# My Stochastic Indicator 2013 John F. Ehlers

roofing.stochastic.indicator <- function(x, lookback = 20) {

Filt = roofing.filter(x)

HighestC = runMax(Filt, lookback)

HighestC[1:lookback] = as.double(HighestC[lookback])

LowestC = runMin(Filt, lookback)

LowestC[1:lookback] = as.double(LowestC[lookback])

Stoc = (Filt - LowestC) / (HighestC - LowestC)

super.smoother.filter(Stoc)

}
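One property of the super smoother that is easy to verify is that its three coefficients sum to one, so a constant input passes through unchanged once the filter has settled. The base R sketch below re-implements the filter on a plain numeric vector to check this; `super_smoother()` and its `period` argument are my own hypothetical names, with the period of 10 taken from the code above.

```r
# Coefficients of the 2-pole super smoother for a given critical period
super_smoother_coef <- function(period = 10) {
  a1 <- exp(-1.414 * pi / period)
  c2 <- 2 * a1 * cos(1.414 * pi / period)
  c3 <- -a1 * a1
  c(c1 = 1 - c2 - c3, c2 = c2, c3 = c3)
}

# Apply the filter to a plain numeric vector (no xts, no SIT)
super_smoother <- function(x, period = 10) {
  k <- super_smoother_coef(period)
  prev <- c(x[1], x[-length(x)])         # previous value, first one repeated
  avg  <- k[["c1"]] * (x + prev) / 2     # two-bar average scaled by c1
  as.numeric(stats::filter(avg, c(k[["c2"]], k[["c3"]]),
                           method = "recursive", init = c(0, 0)))
}

sum(super_smoother_coef())           # equals 1 by construction
out <- super_smoother(rep(5, 100))   # constant input
tail(out, 1)                         # settles at the input level
```

Because the recursion starts from zeros, the first few output values are below the input level; only the settled value matches it.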

First let’s plot John’s stochastic oscillator against the traditional one.

###############################################################################

# Load Systematic Investor Toolbox (SIT)

# https://systematicinvestor.wordpress.com/systematic-investor-toolbox/

###############################################################################

setInternet2(TRUE)

con = gzcon(url('http://www.systematicportfolio.com/sit.gz', 'rb'))

source(con)

close(con)

#*****************************************************************

# Load historical data

#******************************************************************

load.packages('quantmod')

tickers = spl('DG')

data = new.env()

getSymbols(tickers, src = 'yahoo', from = '1970-01-01', env = data, auto.assign = T)

for(i in ls(data)) data[[i]] = adjustOHLC(data[[i]], use.Adjusted=T)

bt.prep(data)

#*****************************************************************

# Setup

#*****************************************************************

prices = data$prices

models = list()

# John Ehlers Stochastic

stoch = roofing.stochastic.indicator(prices)

# 14 Day Stochastic

stoch14 = bt.apply(data, function(x) stoch(HLC(x),14)[,'slowD'])

#*****************************************************************

# Create plots

#******************************************************************

dates = '2011:10::2012:9'

layout(1:3)

plota(prices[dates], type='l', plotX=F)

plota.legend('DG')

plota(stoch[dates], type='l', plotX=F)

abline(h = 0.2, col='red')

abline(h = 0.8, col='red')

plota.legend('John Ehlers Stochastic')

plota(stoch14[dates], type='l')

abline(h = 0.2, col='red')

abline(h = 0.8, col='red')

plota.legend('Stochastic')

Next let’s code the trading rules as in Figures 6 and 8 of the John Ehlers paper, Predictive Indicators for Effective Trading Strategies.

#*****************************************************************

# Code Strategies

#*****************************************************************

# Figure 6: Conventional Wisdom is to Buy When the Indicator Crosses Above 20% and

# To Sell Short when the Indicator Crosses below 80%

data$weight[] = NA

data$weight[] = iif(cross.up(stoch, 0.2), 1, iif(cross.dn(stoch, 0.8), -1, NA))

models$post = bt.run.share(data, clean.signal=T, trade.summary=T)

data$weight[] = NA

data$weight[] = iif(cross.up(stoch, 0.2), 1, iif(cross.dn(stoch, 0.8), 0, NA))

models$post.L = bt.run.share(data, clean.signal=T, trade.summary=T)

data$weight[] = NA

data$weight[] = iif(cross.up(stoch, 0.2), 0, iif(cross.dn(stoch, 0.8), -1, NA))

models$post.S = bt.run.share(data, clean.signal=T, trade.summary=T)

# Figure 8: Predictive Indicators Enable You to Buy When the Indicator Crosses Below 20% and

# To Sell Short when the Indicator Crosses Above 80%

data$weight[] = NA

data$weight[] = iif(cross.dn(stoch, 0.2), 1, iif(cross.up(stoch, 0.8), -1, NA))

models$pre = bt.run.share(data, clean.signal=T, trade.summary=T)

data$weight[] = NA

data$weight[] = iif(cross.dn(stoch, 0.2), 1, iif(cross.up(stoch, 0.8), 0, NA))

models$pre.L = bt.run.share(data, clean.signal=T, trade.summary=T)

data$weight[] = NA

data$weight[] = iif(cross.dn(stoch, 0.2), 0, iif(cross.up(stoch, 0.8), -1, NA))

models$pre.S = bt.run.share(data, clean.signal=T, trade.summary=T)

#*****************************************************************

# Create Report

#******************************************************************

strategy.performance.snapshoot(models, T)

Looks like most profit comes from the long side.
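For readers who want to see how the crossing rules turn into positions, here is a small base R sketch of the long-only "post" rule on a made-up oscillator path. `cross_up()` and `cross_dn()` are hypothetical stand-ins for the SIT helpers of similar name, and I am assuming that an NA weight means "keep the previous position".

```r
# TRUE on the bar where x moves from at-or-below the level to above it
cross_up <- function(x, level) {
  prev <- c(NA, head(x, -1))
  !is.na(prev) & prev <= level & x > level
}

# TRUE on the bar where x moves from at-or-above the level to below it
cross_dn <- function(x, level) {
  prev <- c(NA, head(x, -1))
  !is.na(prev) & prev >= level & x < level
}

osc <- c(0.10, 0.15, 0.25, 0.60, 0.85, 0.90, 0.75, 0.40, 0.15)  # made-up values

# Long when the oscillator crosses above 20%, flat when it crosses below 80%
weight <- rep(NA_real_, length(osc))
weight[cross_up(osc, 0.2)] <- 1
weight[cross_dn(osc, 0.8)] <- 0

# Carry the last decision forward; start flat
for (i in seq_along(weight)) {
  if (is.na(weight[i])) weight[i] <- if (i == 1) 0 else weight[i - 1]
}
weight
```

On this path the position opens on bar 3 and closes on bar 7, so it is held for four bars.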

Finally let’s visualize the timing of the signal for these strategies:

john.ehlers.custom.strategy.plot <- function(data, models, name,

main = name,

dates = '::',

layout = NULL # flag to indicate if layout is already set

) {

# John Ehlers Stochastic

stoch = roofing.stochastic.indicator(data$prices)

# highlight logic based on weight

weight = models[[name]]$weight[dates]

col = iif(weight > 0, 'green', iif(weight < 0, 'red', 'white'))

plota.control$col.x.highlight = col.add.alpha(col, 100)

highlight = T

if(is.null(layout)) layout(1:2)

plota(data$prices[dates], type='l', x.highlight = highlight, plotX = F, main=main)

plota.legend('Long,Short,Not Invested','green,red,white')

plota(stoch[dates], type='l', x.highlight = highlight, plotX = F, ylim=c(0,1))

col = col.add.alpha('red', 100)

abline(h = 0.2, col=col, lwd=3)

abline(h = 0.8, col=col, lwd=3)

plota.legend('John Ehlers Stochastic')

}

layout(1:4, heights=c(2,1,2,1))

john.ehlers.custom.strategy.plot(data, models, 'post.L', dates = '2013::', layout=T,

main = 'post.L: Buy When the Indicator Crosses Above 20% and Sell when the Indicator Crosses Below 80%')

john.ehlers.custom.strategy.plot(data, models, 'pre.L', dates = '2013::', layout=T,

main = 'pre.L: Buy When the Indicator Crosses Below 20% and Sell when the Indicator Crosses Above 80%')

As homework, I encourage you to compare trading John’s stochastic oscillator against the traditional one.

To view the complete source code for this example, please have a look at the john.ehlers.filter.test() function in bt.test.r at github.

Modeling Couch Potato strategy

I first read about the Couch Potato strategy in the MoneySense magazine. I liked this simple strategy because it was easy to understand and easy to manage. The Couch Potato strategy is similar to the Permanent Portfolio strategy that I have analyzed previously.

The Couch Potato strategy invests money in given proportions among different types of assets to ensure diversification, and rebalances the holdings once a year. For example, the Classic Couch Potato strategy is:

- 1) Canadian equity (33.3%)

- 2) U.S. equity (33.3%)

- 3) Canadian bond (33.3%)

I highly recommend reading the following online resources to get more information about the Couch Potato strategy:

- MoneySense

- Canadian Couch Potato

- AssetBuilder

Today, I want to show how you can model and monitor the Couch Potato strategy with the Systematic Investor Toolbox.

###############################################################################

# Load Systematic Investor Toolbox (SIT)

# https://systematicinvestor.wordpress.com/systematic-investor-toolbox/

###############################################################################

setInternet2(TRUE)

con = gzcon(url('http://www.systematicportfolio.com/sit.gz', 'rb'))

source(con)

close(con)

# helper function to model Couch Potato strategy - a fixed allocation strategy

couch.potato.strategy <- function

(

data.all,

tickers = 'XIC.TO,XSP.TO,XBB.TO',

weights = c( 1/3, 1/3, 1/3 ),

periodicity = 'years',

dates = '1900::',

commission = 0.1

)

{

#*****************************************************************

# Load historical data

#******************************************************************

tickers = spl(tickers)

names(weights) = tickers

data <- new.env()

for(s in tickers) data[[ s ]] = data.all[[ s ]]

bt.prep(data, align='remove.na', dates=dates)

#*****************************************************************

# Code Strategies

#******************************************************************

prices = data$prices

n = ncol(prices)

nperiods = nrow(prices)

# find period ends

period.ends = endpoints(data$prices, periodicity)

period.ends = c(1, period.ends[period.ends > 0])

#*****************************************************************

# Code Strategies

#******************************************************************

data$weight[] = NA

for(s in tickers) data$weight[period.ends, s] = weights[s]

model = bt.run.share(data, clean.signal=F, commission=commission)

return(model)

}

The couch.potato.strategy() function creates a periodically rebalanced portfolio for a given static allocation.
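The mechanics of periodic rebalancing can be illustrated without SIT. The base R sketch below lets weights drift with asset returns and resets them to the target at the last row of each year. `rebalanced_returns()` is a hypothetical helper, the two assets and their returns are made up, and commissions are ignored.

```r
# Portfolio returns for target weights that are reset on the given rows
rebalanced_returns <- function(ret, weights, rebalance.rows) {
  w <- weights
  port <- numeric(nrow(ret))
  for (t in seq_len(nrow(ret))) {
    port[t] <- sum(w * ret[t, ])
    w <- w * (1 + ret[t, ]) / (1 + port[t])    # weights drift with performance
    if (t %in% rebalance.rows) w <- weights    # reset at the close of a period end
  }
  port
}

# Six calendar days spanning a year end
dates <- seq(as.Date("2020-12-29"), by = "day", length.out = 6)
years <- format(dates, "%Y")
year.ends <- which(years != c(years[-1], ""))  # last row of each year

# Made-up returns: asset A gains 10% a day, asset B is flat
ret <- cbind(A = rep(0.10, 6), B = rep(0, 6))

port <- rebalanced_returns(ret, c(A = 0.5, B = 0.5), year.ends)
round(port, 4)
```

The portfolio return creeps up while the weight of asset A drifts higher, then drops back to 5% on the first day after the year-end rebalance.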

Next, let’s back-test some Canadian Couch Potato portfolios:

#*****************************************************************

# Load historical data

#******************************************************************

load.packages('quantmod')

map = list()

map$can.eq = 'XIC.TO'

map$can.div = 'XDV.TO'

map$us.eq = 'XSP.TO'

map$us.div = 'DVY'

map$int.eq = 'XIN.TO'

map$can.bond = 'XBB.TO'

map$can.real.bond = 'XRB.TO'

map$can.re = 'XRE.TO'

map$can.it = 'XTR.TO'

map$can.gold = 'XGD.TO'

data <- new.env()

for(s in names(map)) {

data[[ s ]] = getSymbols(map[[ s ]], src = 'yahoo', from = '1995-01-01', env = data, auto.assign = F)

data[[ s ]] = adjustOHLC(data[[ s ]], use.Adjusted=T)

}

#*****************************************************************

# Code Strategies

#******************************************************************

models = list()

periodicity = 'years'

dates = '2006::'

models$classic = couch.potato.strategy(data, 'can.eq,us.eq,can.bond', rep(1/3,3), periodicity, dates)

models$global = couch.potato.strategy(data, 'can.eq,us.eq,int.eq,can.bond', c(0.2, 0.2, 0.2, 0.4), periodicity, dates)

models$yield = couch.potato.strategy(data, 'can.div,can.it,us.div,can.bond', c(0.25, 0.25, 0.25, 0.25), periodicity, dates)

models$growth = couch.potato.strategy(data, 'can.eq,us.eq,int.eq,can.bond', c(0.25, 0.25, 0.25, 0.25), periodicity, dates)

models$complete = couch.potato.strategy(data, 'can.eq,us.eq,int.eq,can.re,can.real.bond,can.bond', c(0.2, 0.15, 0.15, 0.1, 0.1, 0.3), periodicity, dates)

models$permanent = couch.potato.strategy(data, 'can.eq,can.gold,can.bond', c(0.25,0.25,0.5), periodicity, dates)

#*****************************************************************

# Create Report

#******************************************************************

plotbt.custom.report.part1(models)

I have included a few classic Couch Potato portfolios and the Canadian version of the Permanent portfolio. The equity curves speak for themselves: you can call them by fancy names, but in the end all variations of the Couch Potato portfolios performed similarly and suffered a huge draw-down during 2008. The Permanent portfolio did a little better during the 2008 bear market.

Next, let’s back-test some US Couch Potato portfolios:

#*****************************************************************

# Load historical data

#******************************************************************

tickers = spl('VIPSX,VTSMX,VGTSX,SPY,TLT,GLD,SHY')

data <- new.env()

getSymbols(tickers, src = 'yahoo', from = '1995-01-01', env = data, auto.assign = T)

for(i in ls(data)) data[[i]] = adjustOHLC(data[[i]], use.Adjusted=T)

# extend GLD with Gold.PM - London Gold afternoon fixing prices

data$GLD = extend.GLD(data$GLD)

#*****************************************************************

# Code Strategies

#******************************************************************

models = list()

periodicity = 'years'

dates = '2003::'

models$classic = couch.potato.strategy(data, 'VIPSX,VTSMX', rep(1/2,2), periodicity, dates)

models$margarita = couch.potato.strategy(data, 'VIPSX,VTSMX,VGTSX', rep(1/3,3), periodicity, dates)

models$permanent = couch.potato.strategy(data, 'SPY,TLT,GLD,SHY', rep(1/4,4), periodicity, dates)

#*****************************************************************

# Create Report

#******************************************************************

plotbt.custom.report.part1(models)

The US Couch Potato portfolios also suffered huge draw-downs during 2008. The Permanent portfolio held its ground much better.

A lot has been written about the Couch Potato strategy, but looking at the different variations I cannot really see much difference in terms of performance or draw-downs. Probably that is why, in the last few years, I have seen the creation of many new ETFs to address that in one way or another. For example, we now have tactical asset allocation ETFs, minimum volatility ETFs, and income ETFs with covered call overlays.

To view the complete source code for this example, please have a look at the bt.couch.potato.test() function in bt.test.r at github.

Some additional references from the Canadian Couch Potato blog that are worth reading:

Permanent Portfolio – Transaction Cost and better Risk Parity

I want to address questions raised in the comments on my last post, Permanent Portfolio – Simple Tools, about the Permanent Portfolio strategy. Specifically:

- The impact of transaction costs on the performance, and

- Creating a modified version of the risk allocation portfolio that distributes weights across 3 asset classes: stocks(SPY), gold(GLD), and treasuries(TLT), and only invests into cash(SHY) to fill the residual portfolio exposure once we scale the SPY/GLD/TLT portfolio to the target volatility

The first point is easy: to incorporate transaction costs into your back-test, just add the commission=0.1 parameter to the bt.run.share() function call. For example, to see the dollar allocation strategy performance assuming 10c a share commission, use the following code:

# original strategy

models$dollar = bt.run.share(data, clean.signal=F)

# assuming 10c a share commissions

models$dollar = bt.run.share(data, commission=0.1, clean.signal=F)

The second point is a bit more work. First, let’s allocate risk across only 3 asset classes: stocks(SPY), gold(GLD), and treasuries(TLT). Next, let’s scale the SPY/GLD/TLT portfolio to the 7% target volatility. And finally, let’s allocate the residual portfolio exposure to cash(SHY).

#*****************************************************************

# Risk Weighted: allocate only to 3 asset classes: stocks(SPY), gold(GLD), and treasuries(TLT)

#******************************************************************

ret.log = bt.apply.matrix(prices, ROC, type='continuous')

hist.vol = sqrt(252) * bt.apply.matrix(ret.log, runSD, n = 21)

weight.risk = weight.dollar / hist.vol

weight.risk$SHY = 0

weight.risk = weight.risk / rowSums(weight.risk)

data$weight[] = NA

data$weight[period.ends,] = weight.risk[period.ends,]

models$risk = bt.run.share(data, commission=commission, clean.signal=F)

#*****************************************************************

# Risk Weighted + 7% target volatility

#******************************************************************

data$weight[] = NA

data$weight[period.ends,] = target.vol.strategy(models$risk, weight.risk, 7/100, 21, 100/100)[period.ends,]

models$risk.target7 = bt.run.share(data, commission=commission, clean.signal=F)

#*****************************************************************

# Risk Weighted + 7% target volatility + SHY

#******************************************************************

data$weight[] = NA

data$weight[period.ends,] = target.vol.strategy(models$risk, weight.risk, 7/100, 21, 100/100)[period.ends,]

cash = 1-rowSums(data$weight)

data$weight$SHY[period.ends,] = cash[period.ends]

models$risk.target7.shy = bt.run.share(data, commission=commission, clean.signal=F)
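The three steps (risk weights, scaling to the target volatility, residual to cash) reduce to a few lines of arithmetic for a single rebalance date. The base R sketch below uses made-up volatility numbers, and the cap of 1 on the scaling factor reflects my reading of the final 100/100 argument as a maximum exposure, which is an assumption.

```r
# Made-up annualized volatilities for the three risky assets
vol <- c(SPY = 0.20, GLD = 0.16, TLT = 0.10)

# Step 1: risk weights are proportional to 1 / volatility
w.risk <- (1 / vol) / sum(1 / vol)

# Step 2: scale the risky portfolio toward the 7% target volatility
port.vol   <- 0.10                       # made-up volatility of the risk-weighted portfolio
target.vol <- 0.07
scale <- min(target.vol / port.vol, 1)   # never exceed 100% exposure (assumption)
w.scaled <- w.risk * scale

# Step 3: whatever is not invested in risky assets goes to cash (SHY)
weights <- c(w.scaled, SHY = 1 - sum(w.scaled))
round(weights, 4)
```

With these numbers the risky assets are scaled to 70% of the portfolio and the remaining 30% sits in SHY; the lowest-volatility asset, TLT, gets the largest risky weight.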

The modified version of risk allocation portfolio performs well relative to other portfolios even after incorporating the 10c transaction cost.

To view the complete source code for this example, please have a look at the bt.permanent.portfolio3.test() function in bt.test.r at github.